How we created cluster rendering

Enabled by Disguise technology, cluster rendering allows you to scale content endlessly, without being limited by the capabilities of a single GPU. James Bentley, our Head of Software Engineering in R&D, takes us behind the scenes of our cluster rendering development and explores the impact this technology has had on film, broadcast, projection mapping and live events.

What is cluster rendering?

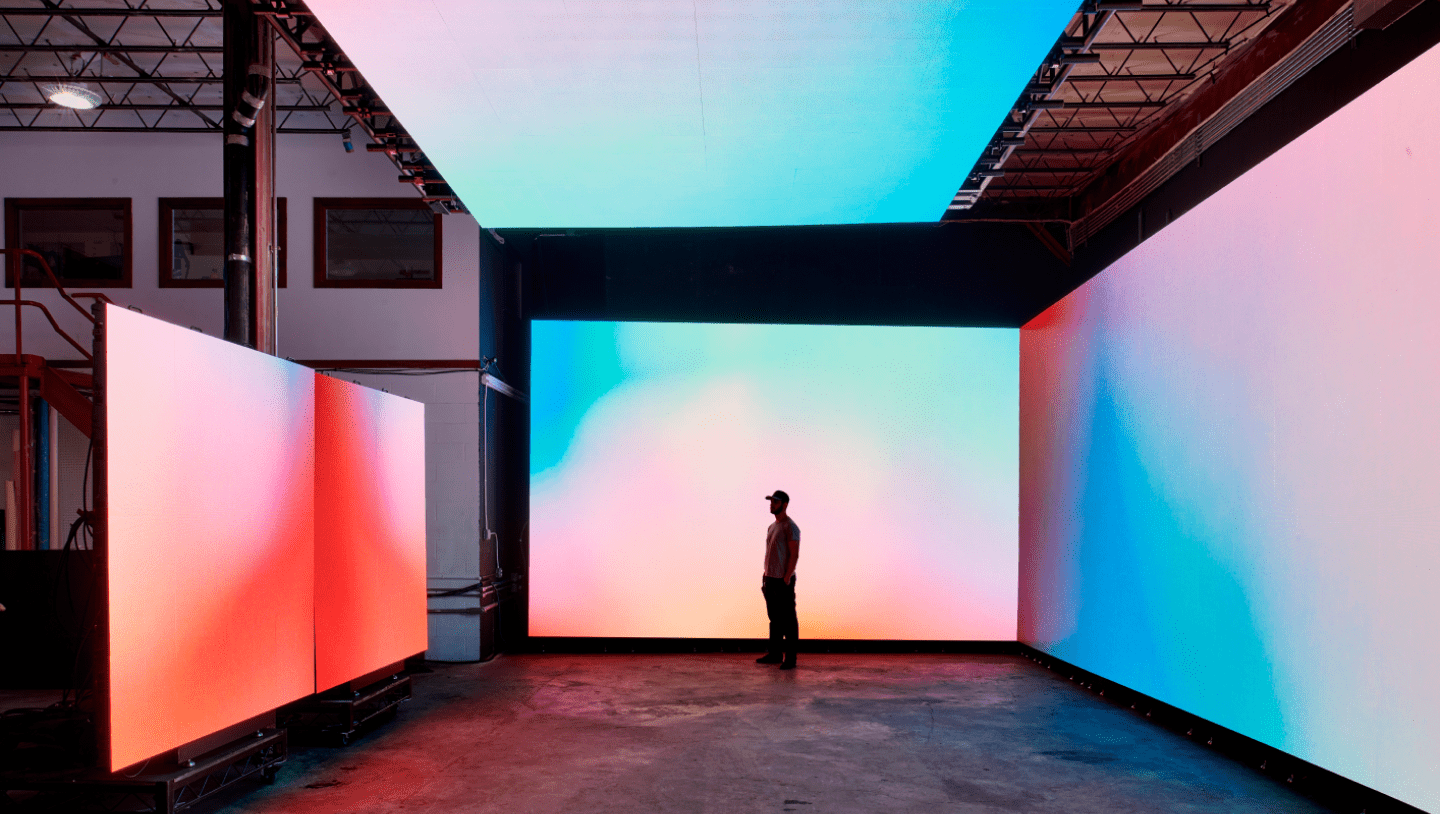

What if you could shoot on a virtual production stage so huge its LED walls would cover an entire stadium? With cluster rendering, this is not only possible but easily achievable. Sitting within the Disguise core software, cluster rendering lets you split pre-rendered and real-time content into segments, so that each slice can be rendered by a different Disguise render node.

“With the rendering workload shifted across machines, you’re no longer limited to a single GPU. Real-time environments can be displayed at infinite resolution for higher-quality visuals than ever before,” says Bentley.

“They can also be displayed on a massive scale, with a limitless number of LED screens or projectors. Whether you want your musicians to play live with giant augmented reality characters or use virtual production to display a visually complex scene in real time, cluster rendering will make it happen.”

The goal behind cluster rendering

Our two-year journey into developing cluster rendering began in partnership with Epic Games. From the start, our goal was to produce a fully productised solution that would work out of the box and allow Unreal Engine visuals to be rendered at any scale, at a higher standard than ever before.

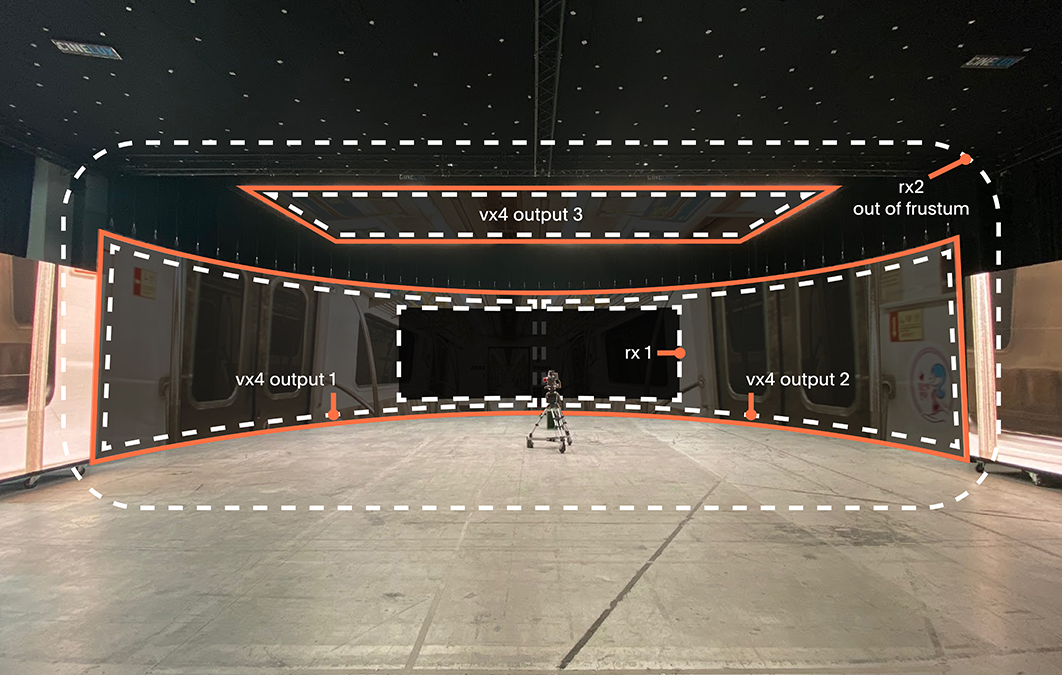

To do this, we needed to let users split their Unreal Engine content into slices, each rendered by a separate Disguise machine running our Designer software (version r18 or higher). We decided these slices could be different elements of a real-time render, such as mountains or clouds. Alternatively, a render could be split into different sections of an LED screen.

By splitting the real-time content into different slices, we could ensure each was given the processing power it needed for high-fidelity results, no matter the scale of the setup.

According to Bentley, “our cluster rendering was also the first solution to allow near-linear scaling, removing the risk that render power becomes less effective as it is split across an increasing number of machines. That means the impact of adding a fourth or fifth render node to the cluster is practically equal to that of adding only one or two.”
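To make “near-linear” concrete, here is a toy model. It uses Amdahl’s law, a standard scaling formula swapped in purely for illustration (the article does not say how Disguise measures scaling): if a fraction `serial_fraction` of each frame’s work cannot be split across machines, the speedup from `n_nodes` machines is 1 / (s + (1 - s) / n). The function names are hypothetical.

```python
def amdahl_speedup(n_nodes: int, serial_fraction: float) -> float:
    """Speedup over a single node under Amdahl's law (an illustrative model)."""
    if n_nodes < 1:
        raise ValueError("need at least one node")
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    # Only the parallel share of the work (1 - s) is divided across the nodes.
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / n_nodes)


def marginal_gain(n_nodes: int, serial_fraction: float) -> float:
    """Extra speedup contributed by adding the n-th node (n >= 2)."""
    return amdahl_speedup(n_nodes, serial_fraction) - amdahl_speedup(n_nodes - 1, serial_fraction)


for s in (0.0, 0.2):
    gains = [round(marginal_gain(n, s), 2) for n in range(2, 6)]
    print(f"serial fraction {s}: gain from nodes 2 to 5 = {gains}")
```

With no serial work, each added node contributes one full unit of speedup; with 20% serial work, the fifth node contributes well under half of what the second did. In this model, near-linear scaling means keeping the non-splittable share of the work close to zero.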

Developing cluster rendering

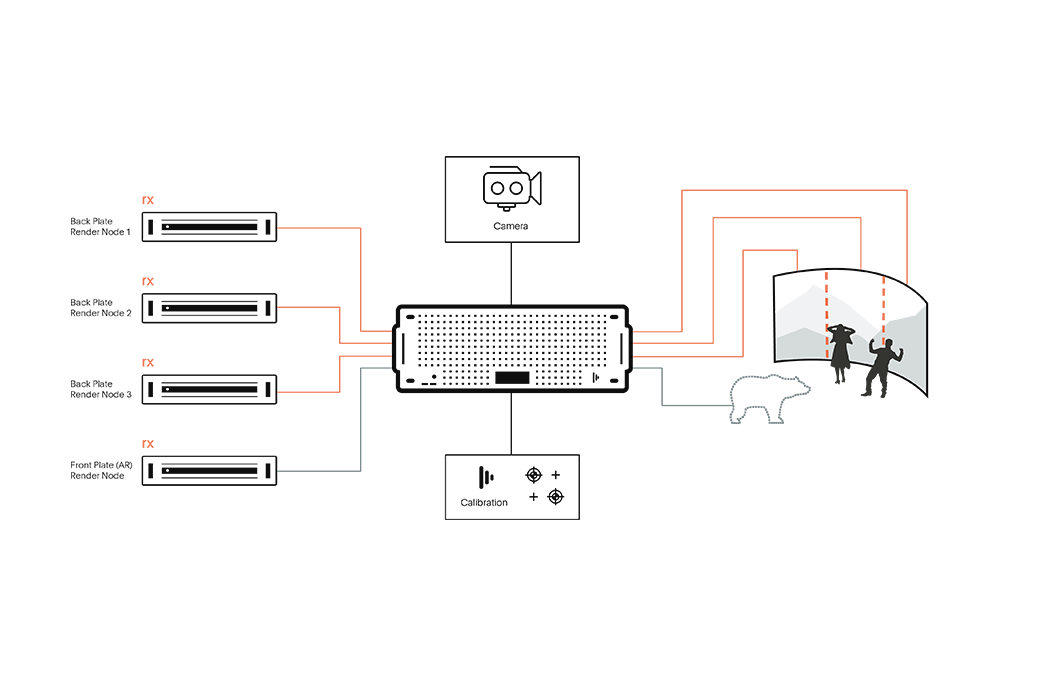

“We wanted our solutions to take the legwork out of setting up a cluster. From configuring multiple render nodes and ensuring every image is synchronised to content distribution and latency compensation – we needed our hardware and software to do it all automatically,” says Bentley.

To do this, the team needed to build a multi-machine management tool that would let them control many machines as if they were one. They did this in three parts:

First, they developed a way of transporting images from a 3D rendered scene over a network connection quickly, efficiently, reliably and without compression artefacts.

Next, they developed a way of compositing these frames back together on the receiving machine for final output.

The third step was finding a way to manage a large number of machines so that they rendered the correct parts of the 3D scene and could be synchronised as one.
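The synchronisation part of this can be illustrated with a small barrier that only releases a frame once every machine has delivered its slice. This is a hypothetical sketch, not Disguise code: `FrameAssembler` and its methods are illustrative names, and a production system would also need timeouts for nodes that never report.

```python
from collections import defaultdict


class FrameAssembler:
    """Hypothetical sync barrier: holds slices until every node has delivered
    its part of the same frame number, then releases them together."""

    def __init__(self, node_ids):
        self.node_ids = set(node_ids)
        # frame_number -> {node_id: slice_data} for frames still incomplete
        self.pending = defaultdict(dict)

    def submit(self, frame_number, node_id, slice_data):
        """Store one slice; return all slices once the frame is complete, else None."""
        if node_id not in self.node_ids:
            raise KeyError(f"unknown node {node_id!r}")
        slices = self.pending[frame_number]
        slices[node_id] = slice_data
        if set(slices) == self.node_ids:
            # Every node has reported this frame: release it for compositing.
            return self.pending.pop(frame_number)
        return None


assembler = FrameAssembler(["node-a", "node-b"])
print(assembler.submit(1, "node-a", "left half"))   # None: still waiting for node-b
print(assembler.submit(1, "node-b", "right half"))  # both slices of frame 1
```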

The engineering process

Our engineering process was highly iterative and split into four distinct steps:

1. Streaming video

“We started by getting a stream of video from one machine to another using a simple network video protocol called NDI. In parallel, we began working on creating RenderStream, our proprietary method of controlling remote rendering nodes so that they could send us precisely the images we needed when we wanted them,” says Bentley.

To do this, the team had to write CUDA and OpenCL encoders and decoders, then test them in multi-threaded environments. The approach was based on a controller machine sending frame requests with particular camera and world parameters, with each render node responding with an encoded video frame.
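The request/response pattern described above can be sketched in a few lines. Everything here is hypothetical: `FrameRequest`, `FrameResponse` and `render_node` are illustrative names and fields, not the RenderStream API, and a JSON round trip stands in for the real network transport and GPU encoder.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class FrameRequest:
    """Sent by the controller: which frame to render and from where (hypothetical fields)."""
    frame_number: int
    camera_position: tuple  # (x, y, z) in world units
    camera_rotation: tuple  # (pan, tilt, roll) in degrees
    field_of_view: float    # horizontal field of view in degrees
    slice_id: int           # which part of the output this node owns


@dataclass
class FrameResponse:
    frame_number: int
    slice_id: int
    payload: bytes          # stand-in for an encoded video frame


def render_node(raw_request: str) -> FrameResponse:
    """Decode a request, 'render' it, and reply tagged with the same frame number."""
    fields = json.loads(raw_request)
    # JSON has no tuple type, so restore the tuple-valued fields.
    fields["camera_position"] = tuple(fields["camera_position"])
    fields["camera_rotation"] = tuple(fields["camera_rotation"])
    req = FrameRequest(**fields)
    # A real node would render the scene here and pass the image to an encoder.
    fake_pixels = f"slice {req.slice_id} @ {req.camera_position}".encode()
    return FrameResponse(req.frame_number, req.slice_id, fake_pixels)


request = FrameRequest(42, (0.0, 1.7, -5.0), (0.0, 0.0, 0.0), 60.0, slice_id=1)
response = render_node(json.dumps(asdict(request)))
print(response.frame_number, response.slice_id, response.payload)
```

Echoing the frame number back in every response is what lets the controller match slices from different nodes to the same moment in time.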

2. Stitching video

Once these tools were working, the team needed to integrate them so users could manually run uncompressed streams as well as NDI-compressed streams. To do this, they wrote their first video stitchers and timing code, which combine multiple slices of a single video frame into a final composited output.
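A stitcher’s core job, joining the slices of one frame back into a single image, can be shown with plain lists standing in for pixel buffers. This is a hypothetical sketch: `stitch_horizontal` is an illustrative name, it assumes side-by-side slices of equal height, and real stitchers operate on GPU textures and handle timing, which this ignores.

```python
def stitch_horizontal(slices):
    """Join equally tall slices (lists of pixel rows) left to right into one frame."""
    if not slices:
        raise ValueError("no slices to stitch")
    height = len(slices[0])
    if any(len(s) != height for s in slices):
        raise ValueError("all slices must have the same height")
    # Output row r is row r of each slice, concatenated in slice order.
    return [[px for s in slices for px in s[r]] for r in range(height)]


left = [[1, 2], [5, 6]]    # 2x2 slice from render node 1
right = [[3, 4], [7, 8]]   # 2x2 slice from render node 2
print(stitch_horizontal([left, right]))  # [[1, 2, 3, 4], [5, 6, 7, 8]]
```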

3. The Asset Launcher

According to Bentley, “at this stage we shifted our focus to creating what we call Asset Launcher, which is a system that automates the cluster rendering setup process. This means that all a user has to do is specify how many machines they want to use to render a scene. Asset Launcher then automatically assigns slices of a render to the computers, and finally starts, stops, and restarts all the machines together so the user feels like they’re just dealing with a single 3D asset instead of a cluster of renders stitched together.”
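One simple way to assign slices of a render to machines is to divide the output canvas into contiguous column ranges, one per machine. The sketch below is an assumption for illustration, not how Asset Launcher actually partitions work: `assign_slices` and the machine names are hypothetical, and it only covers screen-space splits, not splits by scene element.

```python
def assign_slices(canvas_width: int, machines: list[str]) -> dict[str, tuple[int, int]]:
    """Split columns [0, canvas_width) into contiguous ranges, one per machine.

    When the width doesn't divide evenly, slice widths differ by at most one pixel.
    """
    if not machines:
        raise ValueError("need at least one machine")
    if canvas_width <= 0:
        raise ValueError("canvas_width must be positive")
    base, extra = divmod(canvas_width, len(machines))
    assignments = {}
    start = 0
    for i, name in enumerate(machines):
        # The first `extra` machines each take one leftover column.
        width = base + (1 if i < extra else 0)
        assignments[name] = (start, start + width)  # half-open range [start, end)
        start += width
    return assignments


print(assign_slices(7680, ["rn1", "rn2", "rn3"]))
```

Because the user only supplies the machine list, adding a node is just a matter of re-running the assignment with one more name.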

4. Ongoing optimisation

Once that was working, the team spent time optimising the pipeline. The data streams were very large (up to 25Gb/s from each render node), and because all of the decoding and copying is done in parallel, the team had to work hard to make sure everything ran quickly enough to cope with the high resolutions Disguise clients use.
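Some quick arithmetic shows why uncompressed streams strain the pipeline. The frame size, pixel format and frame rate below are assumed example values, not figures from a Disguise project.

```python
def uncompressed_bandwidth_gbps(width, height, bytes_per_pixel, fps):
    """Raw video data rate in gigabits per second (1 Gb = 10**9 bits)."""
    bits_per_second = width * height * bytes_per_pixel * 8 * fps
    return bits_per_second / 1e9


# Assumed example: one UHD slice, 8-bit RGBA (4 bytes per pixel), 60 frames per second.
rate = uncompressed_bandwidth_gbps(3840, 2160, 4, 60)
print(f"{rate:.1f} Gb/s")  # 15.9 Gb/s
```

That is roughly 16 gigabits (about 2 gigabytes) per second for a single UHD slice before any protocol overhead, so several such streams converging on one compositor add up quickly.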

“We continue to iterate on the workflow and on the user-facing APIs that allow customers to write their own integrations for 3D engines other than Unreal Engine. At the moment we have integrations with Unity (which has its own API) and Notch (bundled with the Disguise software), and we will soon also support TouchDesigner,” says Bentley.

The impact of cluster rendering

Since their launch in the Disguise r18 software release in early 2021, cluster rendering and RenderStream have been used extensively in film and broadcast, live events and projection mapping projects around the world.

Cluster rendering for film and broadcast

Cluster rendering is a game-changer in production. With the global virtual production market projected to be worth $6.79 billion by 2030, a growing number of films and television shows will be shot on LED stages. With cluster rendering, directors no longer need to be limited by technology, as virtual production sets can handle workloads with ever-increasing pixel counts and complexity.

For ITV’s Euro 2020 coverage, for instance, Disguise Certified Solution Provider White Light used cluster rendering to scale Unreal Engine scenes simply by adding more render nodes on a 25Gb IP network. The result was a hybrid extended reality and real-life studio space where ITV could present the match coverage in real time.

As well as enabling creatives to work without compromising the scale of their vision, cluster rendering also allows virtual production environments to reach much higher visual fidelity. Virtual production environments often compete with the realism of the real world, which is difficult to render in real time. Cluster rendering removes the single-GPU ceiling on the quality, resolution and frame rate of a real-time visual.

“We’ve worked on projects with upwards of 18 compositor nodes and 50+ render nodes to achieve a creative vision,” says Bentley.

This frees filmmakers to be more agile about their production schedule: there’s no need to wait to shoot a sunset scene if, using cluster rendering, your virtual sunset can look just as good as a real one.

Cluster rendering for live events

When it comes to live events, it’s not enough just to have technology that delivers spectacular content at a massive scale. Video content needs to play perfectly, live: there’s no room for error.

Luckily, cluster rendering helps here too: live events professionals can run content on backup servers so that no frames are dropped if one node crashes. The final compositing step also lets us apply effects in full-resolution screen space without affecting the performance of the scenes or introducing edge artefacts (similar to vignetting). That means whether you’re in a football stadium or at a festival attended by thousands, you can deliver content at any scale or resolution, confident that it will play perfectly every time with no dropped frames.
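The backup idea can be sketched as a per-slice fallback: for each slice of a frame, use the primary node’s image if it arrived, otherwise the backup’s. This assumes backup servers render the same slices in parallel as hot standbys; the article does not specify how Disguise’s failover works, and `select_slices` is a hypothetical name.

```python
def select_slices(primary: dict, backup: dict, slice_ids: list) -> dict:
    """For each slice, use the primary node's frame, falling back to the backup's.

    `primary` and `backup` map slice_id -> frame for one frame number; a missing
    key means that node did not deliver in time (for example, it crashed).
    """
    chosen = {}
    for sid in slice_ids:
        if sid in primary:
            chosen[sid] = primary[sid]
        elif sid in backup:
            chosen[sid] = backup[sid]
        else:
            raise RuntimeError(f"slice {sid} missing from both primary and backup")
    return chosen


# The primary node for slice 2 has crashed, so its backup fills the gap.
print(select_slices({1: "P1"}, {1: "B1", 2: "B2"}, [1, 2]))  # {1: 'P1', 2: 'B2'}
```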

The flexibility of cluster rendering allows programmers to cluster content however they wish. For example, they can make a centre screen incredibly detailed and photorealistic while applying different rendering capabilities to other parts of the show.
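One way to give a centre screen more rendering power than the rest is to share out render nodes in proportion to a weight per screen. The sketch below is illustrative only: `allocate_nodes`, the screen names and the weights are hypothetical, and it uses largest-remainder rounding so the counts always add up to the number of nodes available.

```python
import math


def allocate_nodes(total_nodes: int, weights: dict[str, float]) -> dict[str, int]:
    """Share render nodes between screens in proportion to `weights`."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Ideal (fractional) share of nodes for each screen.
    quotas = {name: total_nodes * w / total_weight for name, w in weights.items()}
    counts = {name: math.floor(q) for name, q in quotas.items()}
    leftover = total_nodes - sum(counts.values())
    # Hand the remaining nodes to the screens with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda n: quotas[n] - counts[n], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts


print(allocate_nodes(10, {"centre": 3.0, "left": 1.0, "right": 1.0}))
```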

Cluster rendering for projection mapping

Ready for Minority Report-inspired holographic maps? Whether you’re displaying interactive galaxies for a museum or augmented reality air hockey for your next party, cluster rendering means you can display projected images onto buildings, walls, ceilings and more at any scale with added realism to match.

For Illuminarium’s “WILD: A Safari Experience” show, for example, the team combined traditional motion picture production techniques with cluster rendering to enable viewers to see real-world, filmed content in a 360º environment without wearable hardware of any kind.

Because cluster rendering removes the need to configure and synchronise render engines by hand, you can scale content limitlessly without worrying about compromising frame accuracy, resolution or graphical complexity. That means no matter what your vision, you’ll be able to achieve it with confidence.